Large-scale IT product development projects are vital for business competitiveness but often face challenges due to their complexity. This guide explores strategies for managing technical complexity in such projects. It provides project managers, technical leaders, and stakeholders with tools to ensure successful outcomes. By examining proven methods and real-world examples, readers will learn to navigate complexities, avoid pitfalls, and drive innovation in IT product development.

Effective management of technical complexity requires a multifaceted approach. This guide covers key areas such as architectural design, agile methodologies, risk mitigation, and emerging technologies. By mastering these aspects, organizations can transform potential obstacles into opportunities for growth and efficiency, ultimately delivering high-value IT products that meet evolving business needs.

Understanding Technical Complexity in IT Projects

Before diving into management strategies, it’s essential to understand what technical complexity entails in the context of large-scale IT projects.

Definition of Technical Complexity

Technical complexity refers to the intricacy and interconnectedness of various system components, technologies, and processes involved in developing and maintaining a large-scale software solution. It encompasses the challenges arising from the scale, diversity, and interdependencies within the project.

Common Sources of Complexity

- Scale: As projects grow in size, the number of components, interactions, and potential failure points rises much faster than the feature count alone would suggest, because every new component can interact with many existing ones. For example, a small e-commerce platform might have a handful of microservices, but a large-scale enterprise solution could have hundreds, each with its own complexities.

- Technological Diversity: Integrating multiple technologies, programming languages, and platforms adds layers of complexity. A project might use Java for backend services, React for the frontend, and various cloud services, each requiring specific expertise and integration efforts.

- Legacy System Integration: Incorporating or interfacing with existing systems often introduces compatibility challenges. For instance, a modern cloud-based CRM system might need to integrate with a decades-old on-premise ERP system, requiring complex data mapping and synchronization.

- Changing Requirements: Evolving business needs and market conditions can lead to shifting project goals and specifications. This is particularly challenging in long-running projects where the business landscape might change significantly during development.

- Data Management: Handling large volumes of data, ensuring data integrity, and managing data flows across systems is increasingly complex. This includes challenges in data storage, processing, and analytics, especially with the rise of big data technologies.

- Security and Compliance: Meeting stringent security requirements and regulatory standards adds another dimension of complexity. This is particularly crucial in industries like finance and healthcare, where data protection regulations like GDPR or HIPAA must be strictly adhered to.

- Performance and Scalability: Designing systems that can handle high loads and scale effectively as demand grows is a significant challenge. This involves considerations like load balancing, caching strategies, and database optimization.
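
To put a rough number on the Scale point above, here is a minimal sketch that counts the possible pairwise links between components. It assumes, purely for illustration, that any component could talk to any other; real systems connect far fewer pairs, and the function name and component counts are hypothetical.

```python
def max_pairwise_links(components: int) -> int:
    """Upper bound on distinct pairwise links among `components` parts: n(n-1)/2."""
    if components < 0:
        raise ValueError("components must be non-negative")
    return components * (components - 1) // 2


# Illustrative component counts, not measurements from any real project.
for n in (5, 50, 200):
    print(f"{n} components -> up to {max_pairwise_links(n)} pairwise links")
```

Going from 5 to 200 components multiplies the component count by 40 but the possible links by nearly 2,000, which is why integration and failure analysis tend to dominate effort on large systems.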

Strategies for Managing Technical Complexity

Now that we’ve identified the sources of complexity, let’s explore strategies to manage them effectively.

1. Robust Project Planning and Scope Management

Effective Planning for IT Projects

- Clear Project Objectives: Define SMART objectives (e.g., “Increase system throughput by 50% within 6 months”). Align goals with business objectives and involve key stakeholders.

- Breaking Down Projects: Use Work Breakdown Structure (WBS) to divide projects into manageable parts, and implement feature-driven decomposition for incremental value delivery.

- Prioritizing Features: Employ the MoSCoW method for requirements prioritization, the Kano model for customer satisfaction, and a value vs. effort matrix for quick wins.
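
As one way to picture the value vs. effort matrix mentioned above, the sketch below sorts a backlog into four quadrants. The 1 to 10 scoring scale, the threshold of 5, the quadrant labels, and the sample features are all assumptions made for illustration; teams typically agree on their own scales.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    value: int   # estimated business value, 1 (low) to 10 (high)
    effort: int  # estimated delivery effort, 1 (low) to 10 (high)


def quadrant(feature: Feature, threshold: int = 5) -> str:
    """Place a feature in one of four value-vs-effort quadrants."""
    high_value = feature.value > threshold
    high_effort = feature.effort > threshold
    if high_value and not high_effort:
        return "quick win"
    if high_value and high_effort:
        return "major project"
    if not high_value and not high_effort:
        return "fill-in"
    return "reconsider"


# Hypothetical backlog items.
backlog = [
    Feature("single sign-on", value=8, effort=3),
    Feature("reporting rewrite", value=9, effort=9),
    Feature("dark mode", value=3, effort=2),
    Feature("legacy export format", value=2, effort=8),
]

# Highest value first; ties broken by lower effort.
for f in sorted(backlog, key=lambda f: (-f.value, f.effort)):
    print(f"{f.name}: {quadrant(f)}")
```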

2. Architectural Design Strategies

Managing Complexity through Architecture

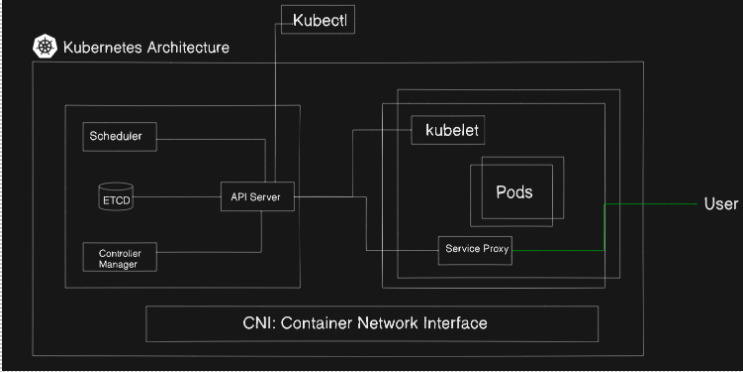

- Modular Architecture: Utilize microservices for scalability, service-oriented architecture (SOA) for flexibility, and component-based development for reusability.

- Scalability: Plan for horizontal and vertical scaling, implement load balancing, and use caching/CDNs to enhance performance.

- Integration Planning: Define clear APIs, use standard protocols, and consider API gateways for managing integrations.

3. Technology Stack Selection

Choosing Sustainable Technologies

- Evaluating Technologies: Align technologies with project goals, assess scalability, and check community support.

- Balancing Innovation: Conduct proof-of-concept testing, use a bimodal IT approach, and have fallback plans.

- Managing Technical Debt: Regularly assess debt, allocate refactoring time, and enforce coding standards.

4. Agile Development and DevOps

Enhancing Project Management

- Scaled Agile Frameworks: Consider SAFe or LeSS for large teams while maintaining project governance.

- DevOps Practices: Automate build, test, and deployment processes; implement infrastructure as code; foster collaboration between development and operations.

- Continuous Integration/Deployment (CI/CD): Set up automated pipelines, use feature flags, and apply blue-green or canary deployments.
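
Feature flags and canary releases both rely on exposing a change to a controlled share of users. The following is a minimal sketch of a percentage-based flag, assuming users are identified by a string ID; the flag name, the hashing scheme, and the function names are illustrative and not taken from any particular feature-flag product.

```python
import hashlib


def rollout_bucket(flag: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Return True if the user falls inside the flag's rollout percentage."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    return rollout_bucket(flag, user_id) < rollout_percent


# Hypothetical flag and user IDs: roughly 10% of users should see the change.
users = [f"user-{i}" for i in range(1000)]
enabled = sum(is_enabled("new-checkout", u, 10) for u in users)
print(f"{enabled} of {len(users)} users see the new checkout")
```

Hashing the flag name together with the user ID keeps each user's experience stable across requests, and raising the percentage only ever adds users, so nobody flips back and forth during a gradual rollout.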

5. Team Structure and Communication

Effective Organization and Collaboration

- Cross-Functional Teams: Form teams around specific features, implement a matrix structure, and consider the Spotify model for large organizations.

- Knowledge Sharing: Conduct tech talks, use collaborative tools, and encourage pair programming.

- Communication Strategies: Establish clear communication plans, use appropriate tools, and hold regular stand-ups and retrospectives.

6. Quality Assurance and Testing

Ensuring Thorough Testing

- Comprehensive Strategies: Implement a test pyramid, conduct regular code reviews, and perform integration testing.

- Automated Testing: Utilize CI with automated tests, set code coverage thresholds, and enforce quality gates.

- Performance and Security Testing: Conduct load testing, performance profiling, and penetration testing.
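
A quality gate is, at its core, a set of pass/fail rules applied to each build. The sketch below shows one possible shape, assuming the pipeline can supply a failed-test count, a line-coverage percentage, and a count of critical findings. The field names and the 80% default threshold are assumptions, not recommendations from any specific CI tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildReport:
    tests_failed: int
    line_coverage: float    # percentage, 0-100
    critical_findings: int  # e.g. from a static-analysis or security scan


def gate_failures(report: BuildReport, min_coverage: float = 80.0) -> list[str]:
    """Return the reasons a build should be blocked; an empty list means pass."""
    reasons = []
    if report.tests_failed > 0:
        reasons.append(f"{report.tests_failed} test(s) failed")
    if report.line_coverage < min_coverage:
        reasons.append(
            f"coverage {report.line_coverage:.1f}% is below {min_coverage:.1f}%"
        )
    if report.critical_findings > 0:
        reasons.append(f"{report.critical_findings} critical finding(s)")
    return reasons


# Hypothetical build: all tests pass, but coverage is under the threshold.
report = BuildReport(tests_failed=0, line_coverage=76.4, critical_findings=0)
problems = gate_failures(report)
print("PASS" if not problems else "BLOCKED: " + "; ".join(problems))
```

Returning every failed rule, rather than stopping at the first, gives developers the full picture in a single pipeline run.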

7. Documentation and Knowledge Management

Maintaining Comprehensive Documentation

- Thorough Practices: Keep architecture diagrams and system designs updated, document APIs and data models, and create user manuals.

- Knowledge Retention Tools: Use collaborative tools, implement version control, and create video tutorials for complex processes.

- Up-to-Date Documentation: Make documentation updates part of the task completion definition, conduct regular reviews, and automate API documentation generation.

8. Risk Management and Contingency Planning

Proactive Risk Management

- Identifying Risks: Conduct risk assessment workshops, prioritize risks with matrices, and consider both technical and non-technical risks.

- Mitigation Strategies: Develop prototypes for new technologies, implement redundancy, and establish continuous monitoring.

- Contingency Planning: Create disaster recovery plans, rollback procedures for deployments, and define escalation pathways for critical issues.
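
A risk matrix can be reduced to a simple likelihood times impact score. The sketch below assumes a 1 to 5 scale for both dimensions and illustrative cut-offs for high, medium, and low; the sample risks are hypothetical, and each organization should calibrate its own scales.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rating(risk: Risk) -> str:
    """Bucket a risk by score; the cut-offs are illustrative."""
    if risk.score >= 15:
        return "high"
    if risk.score >= 6:
        return "medium"
    return "low"


# Hypothetical register mixing technical and non-technical risks.
register = [
    Risk("Key engineer leaves mid-project", likelihood=2, impact=4),
    Risk("Legacy ERP integration slips", likelihood=4, impact=4),
    Risk("Minor UI library deprecation", likelihood=3, impact=1),
]

# Highest score first, so the review starts with the biggest exposure.
for risk in sorted(register, key=lambda r: r.score, reverse=True):
    print(f"{rating(risk):6} {risk.score:2}  {risk.description}")
```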

LogicLoom: Mastering Technical Complexity with Strategic Precision

LogicLoom stands at the forefront of managing technical complexities in today’s rapidly evolving IT landscape. With a comprehensive approach that aligns with industry best practices, LogicLoom excels in every aspect of complexity management. From robust project planning and innovative architectural design to careful technology stack selection and agile development methodologies, LogicLoom demonstrates unparalleled expertise. Their proficiency in team structuring, quality assurance, documentation, and risk management ensures that even the most intricate projects are handled with precision and foresight. By partnering with LogicLoom, organizations gain access to a wealth of experience and a strategic mindset that transforms challenges into opportunities. Whether it’s implementing scalable solutions, fostering effective communication, or navigating the complexities of modern software development, LogicLoom proves to be an invaluable ally in achieving technological excellence and driving business success.

Tools and Techniques for Taming Complexity

Project Management Software: The Backbone of Complex IT Initiatives

In the realm of large-scale IT projects, robust project management software is indispensable. These tools serve as the central nervous system of your project, facilitating:

- Task allocation and tracking

- Resource management

- Timeline visualization

- Real-time collaboration

- Progress reporting

Popular options include Jira, Microsoft Project, and Asana. When selecting a tool, consider factors such as scalability, integration capabilities, and ease of use. The right software can significantly reduce administrative overhead and improve project visibility, allowing teams to focus on tackling technical challenges.

Version Control and Configuration Management: Maintaining Order in Chaos

As project complexity increases, so does the importance of version control and configuration management. These systems are crucial for:

- Managing code changes

- Tracking software versions

- Facilitating collaboration among developers

- Ensuring consistency across environments

Git, along with platforms like GitHub or GitLab, has become the de facto standard for version control. For configuration management, tools like Ansible, Puppet, or Chef help maintain consistency across diverse IT environments.

To implement a robust version control strategy:

- Establish clear branching and merging policies

- Implement code review processes

- Utilize feature flags for gradual rollouts

- Automate build and deployment pipelines

Automated Testing and Continuous Integration: Ensuring Quality at Scale

In complex IT projects, manual testing quickly becomes a bottleneck. Automated testing and continuous integration (CI) are essential for maintaining quality and velocity:

- Unit tests verify individual components

- Integration tests ensure different parts work together

- End-to-end tests validate entire workflows

- Performance tests gauge system efficiency

Tools like Jenkins, CircleCI, or GitLab CI/CD can automate the build, test, and deployment processes. This approach not only catches issues early but also provides rapid feedback to developers, reducing the cost and time associated with bug fixes.
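
To illustrate the difference between the first two test levels listed above, here is a small sketch using Python's standard `unittest` module. The pricing functions and the in-memory store are hypothetical stand-ins; in a real project the integration test would more likely exercise a real database or service.

```python
import unittest


def apply_discount(total: float, percent: float) -> float:
    """Pure function: a natural target for a unit test."""
    return round(total * (1 - percent / 100), 2)


class InMemoryPriceStore:
    """Stand-in for a real price service or database."""

    def __init__(self, prices: dict[str, float]) -> None:
        self._prices = prices

    def price_of(self, sku: str) -> float:
        return self._prices[sku]


def checkout_total(store: InMemoryPriceStore, skus: list[str], percent: float) -> float:
    """Combine the store lookup with the discount logic."""
    return apply_discount(sum(store.price_of(s) for s in skus), percent)


class UnitTests(unittest.TestCase):
    def test_discount_is_applied(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)


class IntegrationTests(unittest.TestCase):
    def test_checkout_uses_store_prices_and_discount(self):
        store = InMemoryPriceStore({"book": 20.0, "pen": 5.0})
        self.assertEqual(checkout_total(store, ["book", "pen", "pen"], 10), 27.0)


# Run both suites programmatically, much as a CI job would on every commit.
loader = unittest.defaultTestLoader
suite = unittest.TestSuite([
    loader.loadTestsFromTestCase(UnitTests),
    loader.loadTestsFromTestCase(IntegrationTests),
])
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

The unit test pins down one function in isolation, while the integration test checks that the lookup and the discount logic still agree when wired together.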

Documentation and Knowledge Management Systems: Preserving Institutional Knowledge

As projects grow in complexity, comprehensive documentation becomes critical. Effective knowledge management systems:

- Capture design decisions and rationales

- Provide up-to-date technical specifications

- Offer troubleshooting guides and FAQs

- Facilitate onboarding of new team members

Tools like Confluence, SharePoint, or specialized wiki software can serve as central repositories for project documentation. Encourage a culture of documentation by integrating it into your development workflow and recognizing contributions to the knowledge base.

Building and Managing High-Performance Teams

Roles and Responsibilities in Complex Projects

Large-scale IT projects require a diverse set of skills and clear role definitions:

- Project Manager: Oversees timeline, budget, and resources

- Technical Architect: Designs overall system structure

- Development Team Leads: Guide and mentor developers

- Quality Assurance Lead: Ensures product meets quality standards

- DevOps Engineer: Manages deployment and infrastructure

- Business Analyst: Bridges technical and business requirements

Clearly defining these roles and their interactions is crucial for smooth project execution. Consider using a RACI (Responsible, Accountable, Consulted, Informed) matrix to clarify decision-making processes and responsibilities.
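
A RACI matrix is easy to keep as structured data and check automatically. The sketch below assumes two common conventions: each task has exactly one Accountable role and at least one Responsible role. The tasks and assignments are hypothetical, and the role names are borrowed from the list above.

```python
def raci_problems(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Check each task for one Accountable and at least one Responsible role."""
    problems = []
    for task, assignments in matrix.items():
        codes = list(assignments.values())
        unknown = sorted(set(codes) - set("RACI"))
        if unknown:
            problems.append(f"{task}: unknown code(s) {unknown}")
        if codes.count("A") != 1:
            problems.append(
                f"{task}: expected exactly one Accountable, found {codes.count('A')}"
            )
        if codes.count("R") == 0:
            problems.append(f"{task}: nobody is Responsible")
    return problems


# Hypothetical matrix: task -> role -> R, A, C, or I.
matrix = {
    "Choose database technology": {
        "Technical Architect": "A",
        "Development Team Leads": "R",
        "DevOps Engineer": "C",
        "Project Manager": "I",
    },
    "Approve production release": {
        "Project Manager": "A",
        "Quality Assurance Lead": "A",  # second Accountable: flagged below
        "DevOps Engineer": "R",
    },
}

for problem in raci_problems(matrix):
    print(problem)
```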

Communication and Collaboration Strategies

Effective communication is the lifeblood of complex IT projects. Implement strategies such as:

- Regular stand-up meetings for quick updates

- Sprint planning and retrospective sessions

- Cross-functional team workshops

- Clear escalation paths for issues

- Collaborative tools like Slack or Microsoft Teams

Encourage open dialogue and create an environment where team members feel comfortable sharing ideas and concerns. This transparency can lead to early problem identification and innovative solutions.

Skills Development and Training

In the fast-paced world of IT, continuous learning is essential. Invest in your team’s growth through:

- Technical workshops and seminars

- Online learning platforms (e.g., Coursera, Udemy)

- Internal knowledge-sharing sessions

- Mentorship programs

- Attendance at industry conferences

By fostering a culture of learning, you not only improve the capabilities of your team but also increase motivation and retention.

Agile Methodologies for Complex Projects

Adapting Agile for Large-Scale Development

While Agile methodologies were initially designed for smaller teams, they can be adapted for large-scale projects:

- Break the project into smaller, manageable components

- Implement cross-functional teams for each component

- Maintain a product backlog at both the team and project level

- Use sprint cycles to deliver incremental value

- Conduct regular demos to stakeholders

The key is to maintain Agile principles like flexibility, continuous improvement, and customer focus while scaling to meet the needs of larger projects.

Scaling Frameworks: SAFe and LeSS

For organizations looking to implement Agile at scale, frameworks like SAFe (Scaled Agile Framework) and LeSS (Large-Scale Scrum) offer structured approaches:

SAFe:

- Provides a comprehensive framework for enterprise-scale Agile

- Incorporates roles like Release Train Engineer and Product Management

- Organizes work into Agile Release Trains

- Emphasizes alignment across the organization

LeSS:

- Focuses on simplicity and minimal overhead

- Maintains a single Product Owner across multiple teams

- Encourages direct communication between teams and stakeholders

- Promotes system-wide retrospectives

Choose a framework that aligns with your organization’s culture and project needs, but be prepared to adapt it as necessary.

Balancing Agility with Structure

While Agile methodologies promote flexibility, large-scale projects still require some structure:

- Maintain a high-level roadmap to guide overall direction

- Use architectural runways to prepare for upcoming features

- Implement governance processes for key decisions

- Balance feature development with technical debt reduction

The goal is to create an environment that allows for rapid iteration while ensuring the project remains on track to meet its long-term objectives.

Future Trends in Managing Technical Complexity

Artificial Intelligence and Machine Learning

AI and ML are poised to revolutionize how we manage complex IT projects:

- Predictive analytics for more accurate project planning

- Automated code review and optimization

- Intelligent testing that focuses on high-risk areas

- AI-assisted decision making for resource allocation

As these technologies mature, they will become invaluable tools for managing complexity at scale.

Low-Code/No-Code Platforms

The rise of low-code and no-code platforms is changing the landscape of IT development:

- Faster prototyping and development cycles

- Empowerment of business users to create simple applications

- Reduction in the complexity of certain development tasks

- Freeing up skilled developers to focus on more complex challenges

While not a panacea, these platforms can significantly reduce complexity in certain areas of large-scale projects.

DevOps and Continuous Delivery

The DevOps movement continues to evolve, offering new ways to manage complexity:

- Automated infrastructure provisioning (Infrastructure as Code)

- Continuous deployment pipelines

- Monitoring and observability tools for complex systems

- Chaos engineering practices to improve system resilience

Embracing DevOps principles can lead to more stable, scalable, and manageable IT systems.

Case Study: Spotify’s Large-Scale Agile Transformation

Spotify, the popular music streaming service, provides an excellent example of managing technical complexity in a large-scale IT environment. As the company grew rapidly, it faced challenges in maintaining its agile culture and managing the increasing complexity of its product development process.

The Challenge

Spotify needed to scale its engineering organization while maintaining agility, fostering innovation, and managing the technical complexity of its growing platform. The company had to handle:

- Rapid growth in user base and feature set

- Increasing number of engineers and teams

- Need for consistent architecture and quality across teams

- Challenges in coordination and alignment between teams

The Solution: The Spotify Model

Spotify developed a unique organizational structure and set of practices, now known as the “Spotify Model,” to address these challenges:

- Squads: Small, cross-functional teams (6-12 people) responsible for specific features or components.

- Tribes: Collections of squads working in related areas.

- Chapters: Groups of people with similar skills across different squads.

- Guilds: Communities of interest that span the entire organization.

This structure allowed Spotify to:

- Maintain autonomy and agility at the squad level

- Ensure alignment and coordination at the tribe level

- Facilitate knowledge sharing and skill development through chapters and guilds

- Scale its engineering organization while managing technical complexity

Key Practices

- Autonomous squads: Each squad has end-to-end responsibility for the features they develop. This includes design, development, testing, and deployment.

- Alignment: Tribes ensure that squads are working towards common goals. Regular tribe meetings and planning sessions help maintain this alignment.

- Loose coupling, tight alignment: Teams are given freedom in how they work, but align on what to build. This balance allows for innovation while maintaining overall product coherence.

- Continuous improvement: Regular retrospectives and experimentation to evolve practices. This includes both team-level and organization-wide improvements.

Technical Practices

In addition to organizational practices, Spotify implemented several technical strategies to manage complexity:

- Microservices architecture: Spotify adopted a microservices approach, allowing teams to develop and deploy services independently.

- Continuous delivery: Implementing robust CI/CD pipelines to enable frequent, reliable releases.

- Data-driven decision making: Using extensive data analytics to inform both technical and product decisions.

- Open source contributions: Encouraging teams to contribute to and use open source projects, fostering innovation and community engagement.

Results

Spotify’s approach allowed them to:

- Scale from a small startup to a global company with over 200 million users

- Maintain a culture of innovation and rapid product development

- Manage the technical complexity of a large-scale, distributed system

- Attract and retain top engineering talent

While the Spotify Model isn’t a one-size-fits-all solution, it demonstrates how innovative organizational and technical practices can help manage complexity in large-scale IT projects.

Conclusion

Managing technical complexity in large-scale IT product development projects is a multifaceted challenge that requires a holistic approach. By focusing on clear planning, modular architecture, effective team management, rigorous quality assurance, and continuous adaptation, organizations can navigate the complexities of these projects successfully.

Key takeaways include:

- Break down complexity through modular design and clear project structuring

- Prioritize scalability and future-proofing in architectural decisions

- Implement

Struggling with technical complexity in your large-scale IT projects? LogicLoom is your ideal partner. Our expert team specializes in transforming complex IT initiatives into streamlined successes. From architectural design to agile methodologies, we’ve got you covered. Don’t let complexity hold you back – let’s conquer it together.

Ready to simplify your IT product development?

Contact LogicLoom at Hi@logicloom.in and let’s turn your complex vision into reality.