Cloud-Native Product Development: Leveraging AWS, Azure, and GCP for Scalable Solutions

Today’s digital landscape demands that businesses constantly innovate, scale, and deliver products with greater speed and efficiency. Cloud-native product development offers a transformative approach to achieving this, allowing organizations to create applications that are not only scalable and resilient but also adaptable to changing market conditions. By harnessing the advanced capabilities of cloud platforms such as AWS, Azure, and GCP, businesses can streamline their development processes, reduce operational costs, and improve product quality. This shift enables companies to focus more on innovation, delivering faster updates and meeting customer needs more effectively in a rapidly evolving environment.

EKS simplifies running Kubernetes on AWS by automating tasks like scaling, patching, and monitoring. It allows businesses to deploy microservices-based applications with ease, leveraging Kubernetes’ powerful orchestration capabilities while maintaining full integration with AWS services.

Lambda enables developers to execute code in response to events without managing infrastructure, supporting event-driven applications at scale. It’s highly scalable and cost-efficient, automatically scaling based on the number of requests, making it perfect for unpredictable workloads.

DynamoDB is a fully managed NoSQL database that supports high-speed, low-latency data access. It’s built to scale automatically to handle large volumes of traffic, making it ideal for applications requiring rapid read/write operations with low response times.

API Gateway helps developers create, deploy, and manage APIs at scale, acting as a bridge between backend services and external applications. It handles tasks such as authorization, throttling, and monitoring, ensuring secure and efficient API performance even under heavy load.

CloudFormation lets you automate the provisioning of AWS resources using template files, which define your cloud infrastructure as code. This ensures consistent environments and simplifies infrastructure management, allowing teams to focus on development rather than operations.

AKS provides a managed Kubernetes environment in Azure, allowing you to deploy and scale containerized applications effortlessly. It also integrates with Azure Active Directory for enhanced security, making it easy to manage and monitor complex microservices architectures.

Azure Functions lets you build event-driven, serverless applications that scale automatically as your workloads grow. This platform supports multiple programming languages and integrates with a variety of services, allowing you to build scalable solutions with minimal infrastructure management.

Cosmos DB provides a globally distributed database solution with built-in replication, offering guaranteed low latency and high availability. Its multi-model support allows developers to use familiar APIs and tools, making it versatile for various use cases across industries.

Azure API Management enables secure and scalable API usage, making it easy to share services across internal teams or external partners. It includes built-in traffic management and API versioning, ensuring consistent performance and reducing integration complexity.

ARM templates provide a declarative way to define and deploy Azure resources. By treating infrastructure as code, you can automate the deployment process, ensuring consistent environments and reducing the risk of manual errors during provisioning.

GKE offers a managed Kubernetes service that leverages Google’s deep expertise in container orchestration. It automates cluster scaling, health checks, and upgrades, making it easier for teams to manage large-scale containerized workloads with minimal overhead.

Cloud Functions is GCP’s serverless platform, enabling you to run lightweight, event-driven functions at scale. It supports a variety of programming languages and integrates seamlessly with GCP’s vast ecosystem of services, allowing you to build highly scalable applications quickly.

Firestore provides a NoSQL database with real-time synchronization, enabling you to build responsive applications that work offline. Its scalability makes it ideal for global applications, and it integrates with Firebase, offering seamless development across web and mobile platforms.

Apigee provides a complete solution for managing the lifecycle of your APIs. It offers features such as traffic management, version control, and developer portals, helping you build secure, scalable, and well-documented APIs for both internal and external use.

Deployment Manager allows you to define your cloud infrastructure using YAML templates, enabling you to manage resources as code. This ensures that deployments are version-controlled, auditable, and repeatable, simplifying the management of complex cloud environments.

Let’s explore some real-world examples of organizations that have successfully leveraged cloud-native development to drive innovation and growth:

While cloud-native development offers numerous benefits, it also comes with its own set of challenges:

As cloud technologies continue to evolve, we can expect several trends to shape the future of cloud-native product development:

Cloud-native product development has revolutionized the software industry, offering unprecedented scalability, agility, and resilience. By leveraging powerful platforms like AWS, Azure, and GCP, businesses can create cost-effective solutions that drive innovation and enhance customer experiences. The adoption of microservices, containerization, serverless computing, and DevOps practices enables organizations to build flexible applications that evolve with their needs. While challenges such as increased complexity and new security considerations exist, the benefits far outweigh the obstacles. As we look to the future, trends like multi-cloud strategies, edge computing, and AI integration promise to further enhance cloud-native capabilities. Organizations that embrace this approach will be well-positioned to thrive in our increasingly digital world, meeting current customer needs while future-proofing their applications for tomorrow’s challenges and opportunities.

Ready to take your business to the next level with cloud-native solutions? At LogicLoom, we specialize in developing cloud-native products using top platforms like AWS, Azure, and GCP. Our expertise ensures that your business can leverage these cloud services to build scalable, resilient applications optimized for growth and innovation. Whether you’re looking for seamless scalability or improved operational efficiency, our cloud-native approach is designed to accelerate your digital transformation. Contact us today at Hi@logicloom.in to start your cloud-native journey!

The Benefits of Cloud-Native Architecture

Key Components of Cloud-Native Development

Microservices divide applications into small, independent units that can be scaled or updated individually. This architecture allows teams to work concurrently on different parts of an app, speeding up development and reducing interdependencies. Services communicate through APIs, allowing for a flexible, scalable system.
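To make the idea of services communicating through APIs concrete, here is a minimal sketch using only the Python standard library. The "catalog" and "order" services, the `/prices/<sku>` route, and the price data are all hypothetical names invented for illustration; a real system would run each service as its own deployable unit rather than in one process.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory data owned by the "catalog" service (prices in cents).
PRICES = {"sku-1": 1250, "sku-2": 400}


class CatalogHandler(BaseHTTPRequestHandler):
    """Catalog service: answers GET /prices/<sku> with a JSON document."""

    def do_GET(self):
        sku = self.path.rsplit("/", 1)[-1]
        if self.path.startswith("/prices/") and sku in PRICES:
            status, payload = 200, {"sku": sku, "cents": PRICES[sku]}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def start_catalog_service():
    """Start the catalog service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), CatalogHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def order_total(host, port, items):
    """Order service logic: price each line item by calling the catalog API."""
    total = 0
    for sku, quantity in items.items():
        conn = http.client.HTTPConnection(host, port, timeout=5)
        try:
            conn.request("GET", f"/prices/{sku}")
            resp = conn.getresponse()
            payload = json.loads(resp.read())
            if resp.status != 200:
                raise LookupError(f"catalog has no price for {sku}")
            total += payload["cents"] * quantity
        finally:
            conn.close()
    return total


if __name__ == "__main__":
    server = start_catalog_service()
    host, port = server.server_address
    print(order_total(host, port, {"sku-1": 2, "sku-2": 1}))
    server.shutdown()
    server.server_close()
```

The order logic knows only the catalog's HTTP contract, not its internals, which is what lets each service be updated or scaled on its own.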

Containers provide a consistent runtime environment, ensuring that applications run the same way across development, testing, and production. This minimizes environment-related issues and increases portability. They also make it easy to scale individual services without affecting the rest of the application.

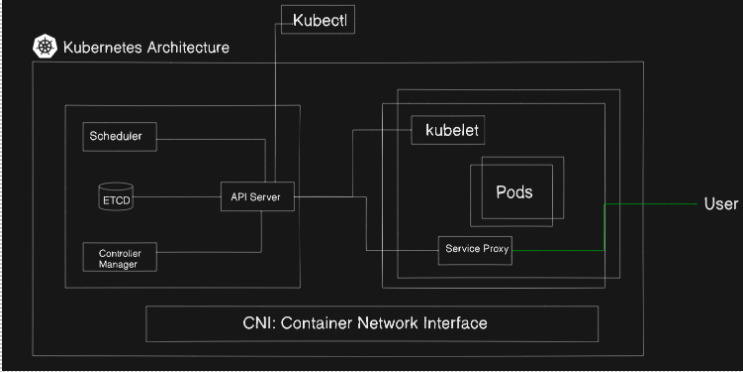

Orchestration platforms like Kubernetes automate the scaling and management of containerized applications. They dynamically adjust resources based on traffic demands and monitor health, ensuring availability. These platforms can also distribute workloads across multiple nodes to prevent single points of failure.
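As an illustration of how an orchestrator adjusts capacity to traffic, the sketch below follows the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a small tolerance of 1. The function name and the `min_replicas` / `max_replicas` defaults are assumptions chosen for this example, and the real autoscaler applies further safeguards not shown here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Return the replica count suggested by the HPA-style scaling rule."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (hypothetical defaults above).
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```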

With serverless platforms, developers focus on writing code, while the platform handles infrastructure scaling automatically. This reduces operational overhead and optimizes costs, as you only pay for the exact resources used during function execution, rather than for pre-allocated capacity.
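The pay-per-use point can be made concrete with some rough arithmetic. The sketch below assumes a billing model of a per-request fee plus a charge per GB-second of execution, which is how function platforms commonly bill; every price in it is a hypothetical placeholder, not a quote from any provider.

```python
def monthly_serverless_cost(invocations, avg_duration_s, memory_gb,
                            price_per_gb_second, price_per_request):
    """Cost of paying only for execution time actually used."""
    gb_seconds = invocations * avg_duration_s * memory_gb
    return gb_seconds * price_per_gb_second + invocations * price_per_request


def monthly_provisioned_cost(instances, hourly_price, hours=730):
    """Cost of capacity that is paid for whether or not it is busy."""
    return instances * hourly_price * hours


if __name__ == "__main__":
    # All prices below are made-up placeholders for illustration.
    serverless = monthly_serverless_cost(
        invocations=1_000_000, avg_duration_s=0.2, memory_gb=0.5,
        price_per_gb_second=0.00002, price_per_request=0.0000002)
    provisioned = monthly_provisioned_cost(instances=2, hourly_price=0.05)
    print(f"serverless: {serverless:.2f}, provisioned: {provisioned:.2f}")
```

With sustained high traffic the comparison can flip, so it is worth rerunning the numbers with your own workload and your provider's current prices.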

DevOps bridges the gap between development and operations teams, fostering a culture of collaboration. CI/CD automates testing, deployment, and monitoring, reducing manual intervention and enabling frequent, reliable releases. Together, they improve software quality and speed up delivery cycles.
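The gating behavior at the heart of a CI/CD pipeline can be sketched in a few lines: stages run in order, and a failure stops everything after it, so a broken build never reaches deployment. The `run_pipeline` helper and the stage names are hypothetical; real pipelines are usually declared in a CI system's own configuration format rather than written by hand like this.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order and stop at the first failure.

    Returns a list of (name, status) pairs for the stages that were attempted.
    """
    results = []
    for name, step in stages:
        try:
            step()
        except Exception as exc:
            results.append((name, f"failed: {exc}"))
            break  # later stages, such as deploy, are never reached
        results.append((name, "ok"))
    return results


if __name__ == "__main__":
    def failing_tests():
        raise RuntimeError("2 tests failed")

    report = run_pipeline([
        ("build", lambda: None),
        ("test", failing_tests),
        ("deploy", lambda: None),
    ])
    for name, status in report:
        print(f"{name}: {status}")
```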

IaC allows developers to manage and provision infrastructure through machine-readable scripts, reducing manual configurations. This approach ensures consistency across environments, supports version control, and enables automated, repeatable deployments, reducing human error and enhancing system reliability.

Leveraging AWS for Cloud-Native Solutions

Amazon Elastic Kubernetes Service (EKS):

AWS Lambda:
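For a sense of what event-driven code on Lambda looks like, here is a minimal sketch of a Python handler. The `(event, context)` signature is the one Lambda uses for Python functions; the event and response shapes assume the function sits behind an API Gateway proxy integration, and the greeting logic is purely illustrative.

```python
import json


def handler(event, context):
    """Respond to an HTTP-style event with a JSON greeting."""
    # queryStringParameters may be absent or None when no query string is sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation with a hand-built event; Lambda supplies both arguments
    # when the function is deployed.
    print(handler({"queryStringParameters": {"name": "Ada"}}, None))
```

There is no server or scaling logic in the function itself; the platform invokes the handler once per event.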

Amazon DynamoDB:

Amazon API Gateway:

AWS CloudFormation:
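As a small infrastructure-as-code illustration, the sketch below builds a minimal CloudFormation template as a Python dictionary and prints it as JSON, one of the formats CloudFormation accepts. The logical resource name `AppBucket` and the `render_template` helper are hypothetical, and deploying the output would still require the AWS CLI or console.

```python
import json


def render_template(bucket_logical_id="AppBucket"):
    """Return a minimal CloudFormation template (JSON) for one S3 bucket."""
    template = {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative template: one versioned S3 bucket.",
        "Resources": {
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"}
                },
            }
        },
    }
    return json.dumps(template, indent=2)


if __name__ == "__main__":
    print(render_template())
```

Because the template is plain text, it can be reviewed and version-controlled like application code, which is where the consistency benefit comes from.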

Harnessing the Power of Microsoft Azure

Azure Kubernetes Service (AKS):

Azure Functions:

Azure Cosmos DB:

Azure API Management:

Azure Resource Manager (ARM) Templates:

Utilizing Google Cloud Platform (GCP)

Google Kubernetes Engine (GKE):

Cloud Functions:

Cloud Firestore:

Apigee API Management:

Cloud Deployment Manager:

Best Practices for Cloud-Native Product Development

Case Studies: Real-World Success Stories

Netflix, the world’s leading streaming service, migrated its entire infrastructure to AWS to support its rapid global expansion. By leveraging AWS services like EC2, S3, and DynamoDB, Netflix built a highly scalable and resilient platform that serves millions of users worldwide.

Key achievements:

• Scaled to support over 200 million subscribers globally

• Reduced video startup times by 70%

• Achieved 99.99% availability for streaming services

Spotify, the popular music streaming platform, migrated its infrastructure from on-premises data centers to Google Cloud Platform. This move enabled Spotify to scale its services more efficiently and leverage GCP’s advanced data analytics capabilities.

Key achievements:

• Reduced latency for users worldwide

• Improved data-driven decision making with BigQuery

• Accelerated feature development and deployment

Zulily, an e-commerce company, leveraged Microsoft Azure to build a cloud-native platform that could handle its rapid growth and daily flash sales. By using services like Azure Kubernetes Service and Cosmos DB, Zulily created a scalable and responsive shopping experience for its customers.

Key achievements:

• Supported 100x traffic spikes during flash sales

• Reduced infrastructure costs by 40%

• Improved developer productivity and time-to-market

Challenges and Considerations

To address these challenges, organizations should invest in training, adopt cloud-agnostic technologies where possible, implement robust security practices, and continuously monitor and optimize their cloud usage.

The Future of Cloud-Native Development

By staying abreast of these trends and continuously adapting their strategies, organizations can position themselves to take full advantage of cloud-native product development and drive innovation in their respective industries.

Conclusion:

Unlock the Power of Cloud-Native Development with LogicLoom