Microservices architecture has gained popularity for its scalability, flexibility, and resilience. Kubernetes, an open-source container orchestration platform, provides powerful tools for deploying and managing microservices at scale. In this guide, we’ll walk through the process of deploying a microservices-based application on Kubernetes using PostgreSQL as the database. By the end of this step-by-step tutorial, you should be able to apply the same approach to your own projects.

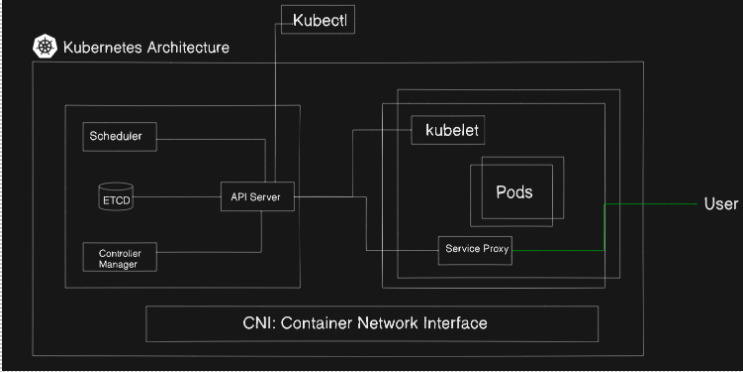

The architecture of Kubernetes comprises several key components, each playing a vital role in managing and orchestrating containerized workloads. Here are the main components of Kubernetes architecture:

Control Plane (Master Node):

- API Server: The Kubernetes API server is a central component that acts as a frontend for the Kubernetes control plane. It exposes the Kubernetes API, which serves as the primary interface for managing and interacting with the Kubernetes cluster. The API server handles all API requests, including creating, updating, and deleting resources like pods, services, deployments, and more.

- Scheduler: The scheduler is responsible for assigning pods to nodes based on resource requirements, quality of service requirements, and other constraints specified in the pod specification (PodSpec). It aims for efficient resource utilization and balanced workload distribution across the cluster by considering factors like available resources, node affinity, and anti-affinity rules.

- Controller Manager: The controller manager is a collection of control loops that continuously monitor the cluster’s state and reconcile it with the desired state defined in the Kubernetes resource objects. Each controller within the controller manager is responsible for a specific type of resource, such as nodes, replica sets, deployments, and endpoints. For example, the node controller notices when a node becomes unreachable and responds to it, while the ReplicaSet controller (the successor to the older replication controller) maintains the desired number of pod replicas.

- etcd: etcd is a distributed key-value store that serves as the cluster’s database, storing configuration data, state information, and metadata about the Kubernetes cluster. It provides a reliable and consistent data store that allows Kubernetes components to maintain a shared understanding of the cluster’s state. When run as a multi-member cluster, etcd uses the Raft consensus algorithm (leader election plus log replication) to keep data consistent and to tolerate the failure of a minority of its members.
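To make the scheduler’s inputs concrete, here is a minimal sketch of a Pod manifest that carries both resource requests and a node affinity rule. The pod name `scheduling-demo`, the `nginx:1.27` image, and the CPU and memory figures are placeholders chosen for illustration, not values from this project. The snippet only writes the file; nothing is sent to the cluster.

```shell
# Write a hypothetical Pod manifest that gives the scheduler constraints to work with.
cat > scheduling-demo-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo            # hypothetical name
spec:
  affinity:
    nodeAffinity:
      # Hard rule: only nodes whose OS label is "linux" are candidates.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values: ["linux"]
  containers:
    - name: app
      image: nginx:1.27            # placeholder image
      resources:
        requests:                  # what the scheduler reserves on the chosen node
          cpu: "250m"
          memory: "128Mi"
        limits:                    # upper bound enforced at runtime
          cpu: "500m"
          memory: "256Mi"
EOF
```

Applying the file with `kubectl apply -f scheduling-demo-pod.yaml` and then running `kubectl get pod scheduling-demo -o wide` should show which node the scheduler picked. Because the requests are lower than the limits, Kubernetes would place this pod in the Burstable quality-of-service class.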

Node (Worker Node):

- Kubelet: The kubelet is an agent that runs on each node in the Kubernetes cluster and is responsible for managing pods and containers on the node. It receives pod specifications (PodSpecs) from the API server and ensures that the containers described in the PodSpecs are running and healthy on the node. The kubelet communicates with the container runtime (e.g., containerd or CRI-O) through the Container Runtime Interface (CRI) to start, stop, and monitor containers, and reports the node’s status and resource usage back to the API server.

- Kube-proxy: The kube-proxy is a network proxy that runs on each node and maintains the network rules that implement Services on that node. A Kubernetes Service exposes a set of pods as a network service with a stable IP address; the matching DNS name is provided by the cluster DNS add-on (typically CoreDNS), not by kube-proxy. The kube-proxy handles connection forwarding and load balancing across the pods behind a Service, so that traffic sent to the Service address reaches a healthy backend pod.

- Container Runtime: The container runtime is the software responsible for running containers on the node. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), including containerd and CRI-O. Docker Engine can still be used through the cri-dockerd adapter, because the built-in Docker integration (dockershim) was removed in Kubernetes 1.24. The container runtime pulls container images from a container registry, creates and manages container instances based on those images, and uses the operating system kernel’s isolation features to separate containers and limit their resources.

Understanding Microservices Architecture:

Microservices architecture breaks a monolithic application into smaller, self-contained services. Each service has its own well-defined boundaries, its own communication protocols, and, optionally, its own database. This approach fosters:

- Loose coupling: Microservices interact with each other through well-defined APIs, minimizing dependencies and promoting independent development.

- Independent deployment: Services can be deployed, scaled, and updated independently without affecting the entire application, streamlining maintenance and innovation.

- Separate databases: Services can leverage their own databases (relational, NoSQL, etc.) based on their specific needs, enhancing data management flexibility.

Setting up a Kubernetes cluster:

We can set up a Kubernetes cluster locally with tools like Minikube or kubeadm, or use a managed service from a cloud provider, such as AWS EKS, Google GKE, or Azure AKS.
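For a local test cluster, Minikube is probably the quickest of these options. The function below is a small sketch that starts a single-node cluster and then lists its nodes. It assumes `minikube` and `kubectl` are already installed; the function name `start_local_cluster` and the CPU and memory sizes are illustrative choices, so adjust them to your machine.

```shell
# Start a local single-node cluster with Minikube and confirm the node is registered.
# Assumes minikube and kubectl are installed; the resource sizes are illustrative.
start_local_cluster() {
  minikube start --cpus=2 --memory=4096 &&
  kubectl get nodes
}
```

Calling `start_local_cluster` should end with a node list in which the single Minikube node reports a `Ready` status once startup has finished.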

Project Overview:

Project Name: Microservices E-commerce Platform

Description: A scalable e-commerce platform built using microservices architecture, allowing users to browse products, add them to the cart, and place orders.

Architecture:

- Frontend Service: A frontend service built with Angular or React, serving as the user interface. It communicates with backend services via RESTful APIs.

- Authentication Service: Manages user authentication and authorization, and provides endpoints for user registration, login, and token generation. Implemented using Node.js.

- Product Service: Handles product-related operations such as listing products, fetching product details, and searching products. Implemented using Node.js and Express.js, backed by a database like PostgreSQL.

- Cart Service: Manages user shopping carts, allowing users to add, update, and remove items. Implemented using Node.js and integrated with a caching mechanism for performance.

- Order Service: Handles order creation, order retrieval, and order processing. Stores order information in a database and integrates with external payment gateways for payment processing.
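As a rough sketch of how one of these services could map onto Kubernetes objects, the snippet below writes a Deployment and a Service for the Product Service. It assumes the service listens on port 3000 and is packaged as an image tagged `micro:latest`; the object names, the replica count, and the `DATABASE_HOST` variable are hypothetical and would need to match your own code.

```shell
# Write a hypothetical Deployment + Service manifest for the Product Service.
cat > product-service.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: product-service
spec:
  replicas: 2                          # illustrative replica count
  selector:
    matchLabels:
      app: product-service
  template:
    metadata:
      labels:
        app: product-service
    spec:
      containers:
        - name: product-service
          image: micro:latest          # assumed image tag
          imagePullPolicy: IfNotPresent   # lets a locally loaded image be used
          ports:
            - containerPort: 3000
          env:
            - name: DATABASE_HOST      # hypothetical variable read by the app
              value: postgres          # assumes a Service named "postgres" exists
          readinessProbe:              # pod receives traffic only once the port accepts connections
            tcpSocket:
              port: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: product-service
spec:
  selector:
    app: product-service               # routes to pods carrying this label
  ports:
    - port: 80                         # port other services call
      targetPort: 3000                 # port the container listens on
EOF
```

Inside the cluster, other services could then reach the Product Service at `http://product-service` in the same namespace. The other services in the list would follow the same pattern with their own names, images, and ports.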

Deployment Configuration:

- Dockerization: Each microservice is containerized using Docker, ensuring consistency and portability across environments.

- Kubernetes Deployment: Kubernetes manifests (YAML files) are created for each microservice, defining deployments, services, persistent volumes, and persistent volume claims.
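For the PostgreSQL database, the manifests might look like the sketch below: a Secret for the password, a PersistentVolumeClaim for storage, a single-replica Deployment, and a Service. All names, the `postgres:16` image tag, the 1Gi size, and the `change-me` password are placeholders. The sketch also assumes the cluster has a default StorageClass that provisions the persistent volume automatically, as Minikube does. Many production setups would use a StatefulSet or a managed database instead.

```shell
# Write a hypothetical set of manifests for a single PostgreSQL instance.
cat > postgres.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: postgres-credentials
type: Opaque
stringData:
  POSTGRES_PASSWORD: change-me         # placeholder; never commit real passwords
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-data
spec:
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 1Gi                     # illustrative size
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres
spec:
  replicas: 1
  strategy:
    type: Recreate                     # avoid two pods sharing one ReadWriteOnce volume
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres:16
          ports:
            - containerPort: 5432
          envFrom:
            - secretRef:
                name: postgres-credentials
          env:
            - name: PGDATA             # keep data in a subdirectory of the mount
              value: /var/lib/postgresql/data/pgdata
          volumeMounts:
            - name: data
              mountPath: /var/lib/postgresql/data
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: postgres-data
---
apiVersion: v1
kind: Service
metadata:
  name: postgres
spec:
  selector:
    app: postgres
  ports:
    - port: 5432
EOF
```

After `kubectl apply -f postgres.yaml`, running `kubectl get persistentvolumeclaims` should eventually list `postgres-data` as `Bound`, and other pods in the same namespace could connect to the database at host `postgres` on port 5432.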

Pre-requisites:

- A Kubernetes Cluster: You’ll need a Kubernetes cluster to deploy your microservices. Several options exist, including setting up your own cluster using tools like Minikube or kubeadm, or leveraging managed Kubernetes services offered by cloud providers (AWS EKS, Google GKE, Azure AKS). Refer to the official Kubernetes documentation for detailed setup instructions based on your chosen approach.

- Dockerized Microservices: Each microservice within your application should be containerized using Docker. This ensures consistent packaging and simplifies deployment across environments. Create a Dockerfile specific to your programming language and application requirements.
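Before going further, it can help to confirm that the command-line tools used in the rest of this guide are installed. The helper below is a small sketch; the function name `check_tools` is made up for this example, and the tool list covers only `docker` and `kubectl`.

```shell
# Report which of the command-line tools used in this guide are on the PATH.
check_tools() {
  for tool in docker kubectl; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "$tool: found"
    else
      echo "$tool: missing"
    fi
  done
}

check_tools
```

Any tool reported as `missing` needs to be installed before the build and deployment commands that follow will work.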

Dockerfile:

    # Use an official Node.js LTS runtime as the base image
    FROM node:22

    # Set the working directory inside the container
    WORKDIR /usr/src/app

    # Copy package.json and package-lock.json to the working directory
    COPY package*.json ./

    # Install dependencies
    RUN npm install

    # Copy the rest of the application code to the working directory
    COPY . .

    # Document the port on which the Node.js application listens
    EXPOSE 3000

    # Command to run the application
    CMD ["node", "app.js"]

To build the Docker image, run the following command in the directory that contains the Dockerfile:

    docker build -t micro .
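Because the Dockerfile copies the whole build context with `COPY . .`, a local `node_modules` folder would overwrite the dependencies installed inside the image and slow the build down. A `.dockerignore` file placed next to the Dockerfile is a common way to avoid that. The entries below are a suggested starting point rather than a fixed list; adjust them to your project.

```shell
# Write a .dockerignore so local artifacts are left out of the build context.
cat > .dockerignore <<'EOF'
node_modules
npm-debug.log
.git
.env
Dockerfile
.dockerignore
EOF
```

Create this file before running `docker build`, so that only the source code and the package files are sent to the Docker daemon.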

Deployment Commands:

- Apply Configuration: `kubectl apply -f your_configuration.yaml`

- List Resources:

  - Pods: `kubectl get pods`

  - Deployments: `kubectl get deployments`

  - Services: `kubectl get services`

  - PersistentVolumeClaims: `kubectl get persistentvolumeclaims`

- Describe Resource: `kubectl describe <resource_type> <resource_name>`

- Watch Resources: `kubectl get <resource_type> --watch`

- Delete Resource: `kubectl delete <resource_type> <resource_name>`

- Delete All Resources from a Configuration File: `kubectl delete -f your_configuration.yaml`

- Scale Deployment: `kubectl scale deployment <deployment_name> --replicas=<number_of_replicas>`

- Port Forwarding: `kubectl port-forward <pod_name> <local_port>:<remote_port>`

- Logs: `kubectl logs <pod_name>`

- Exec into a Pod: `kubectl exec -it <pod_name> -- /bin/bash` (use `/bin/sh` if the image does not include bash)

- List Nodes: `kubectl get nodes`

- Validate Manifests Without Applying Them: `kubectl apply -f deployment.yml --dry-run=client` and `kubectl apply -f service.yml --dry-run=client`
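Several of these commands are usually run together. The function below is one possible way to chain them: it applies a manifest, waits for the Deployment to finish rolling out, and then lists the pods. The function name `deploy_and_verify` and the 120-second timeout are choices made for this sketch. `kubectl rollout status` is a standard kubectl subcommand that does not appear in the list above.

```shell
# Apply a manifest, wait for a Deployment to finish rolling out, then list the pods.
# Usage: deploy_and_verify <manifest_file> <deployment_name>
deploy_and_verify() {
  manifest="$1"
  deployment="$2"
  kubectl apply -f "$manifest" &&
  kubectl rollout status "deployment/$deployment" --timeout=120s &&
  kubectl get pods
}
```

For example, `deploy_and_verify your_configuration.yaml <deployment_name>` stops at the first failing step, so a broken manifest or a rollout that never becomes ready is reported instead of being silently skipped.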

Conclusion:

The Microservices E-commerce Platform uses Kubernetes and a microservices architecture to build a scalable, adaptable, and robust e-commerce system. Through Docker containerization and Kubernetes deployment, the platform achieves:

- Scalability: Each service can scale independently to meet demand.

- Flexibility: Developers can choose different technologies for each service.

- Resilience: A failure in one service is less likely to bring down the platform as a whole.

- Portability: The containerized services run consistently across different environments.

- Efficiency: Kubernetes reduces manual work by automating deployment and management processes.

This approach helps the platform adapt to evolving requirements, ship changes quickly, and give users a reliable experience.